Vision AI

Test automation has advanced rapidly, and tools now integrate sophisticated AI techniques to optimize the testing process. Traditional automation focused on running predefined scripts based on fixed test cases. AI adds a layer of intelligence to testing tools, using machine learning, natural language processing (NLP), computer vision, and other methods to make testing smarter, quicker, and more effective.

One of these AI technologies, Vision AI, helps systems interpret and understand visual information. Vision AI can extract text from images and perform actions based on this information, adding significant power and flexibility to automated testing.

In this article, we will discuss Vision AI in more detail, highlighting its core features and how testRigor leverages this technology to greatly improve the quality of applications.

What is Vision AI?

Vision AI is the use of artificial intelligence, specifically computer vision, to aid in testing software applications by enabling machines to interpret visual elements like images, buttons, icons, and text, much as a human tester would. Vision AI has proven especially valuable for testing software with visually rich interfaces, such as mobile apps, games, and applications that rely on graphical user interfaces (GUIs).

The Role of Vision AI in Test Automation

Traditional automation relies heavily on locators like XPath, CSS selectors or IDs to identify and interact with UI elements. While this works well in many cases, it becomes challenging when dealing with dynamic content, frequent UI changes or visually complex elements. Maintaining scripts in these cases can be difficult, as even minor UI changes can break tests, requiring frequent manual updates.

Vision AI overcomes these challenges by “seeing” the application in a way similar to that of a human. Instead of depending solely on code-based locators, Vision AI uses computer vision algorithms to identify and interact with UI components based on their visual attributes, such as shape, size, color or position. This makes the tests more resilient to changes, reducing the need for constant maintenance.

Vision AI: Top Features

Let’s discuss the key features of Vision AI that make it so valuable in the industry.

Visual Recognition and Analysis

Visual recognition is one of the foundational features of Vision AI, allowing it to identify and interpret various elements within a user interface (UI) as a human tester would.

- Component Identification: Vision AI can recognize a range of UI elements, such as buttons, text fields, icons, menus, and images, across different screen layouts. This ability is particularly useful for testing applications with complex or visually rich interfaces, including mobile apps, games, and modern web applications.

- Visual Defect Detection: Vision AI can identify visual defects, such as missing elements, incorrect placements, or inconsistencies in UI layout, that traditional code-based locators might miss. This visual inspection allows it to spot issues that could affect the user experience, like misaligned buttons or overlapping text fields.

- Contextual Understanding: Beyond recognizing individual elements, Vision AI can infer their context. It can comprehend the purpose of UI components by analyzing their spatial relationships and design. For example, it might recognize a “Submit” button and understand its functional role in completing a form, just as a human would.

Object Detection and Localization

Object detection and localization allow Vision AI to identify specific elements or objects on a screen and determine their precise location.

- Pattern and Shape Recognition: Using advanced computer vision models, Vision AI can go beyond simple pixel matching and recognize elements based on patterns, shapes, and structures. For instance, it can detect a “Submit” button even if it undergoes minor changes in shape, size, color or position. Changes like these would typically break traditional automation scripts.

- Dynamic Element Handling: This feature is especially valuable for applications with dynamic UI components, where elements may frequently change in appearance or location. Vision AI can adapt to these changes, improving test resilience and reducing maintenance effort.

- Position Accuracy: Object localization enables Vision AI to find the exact position of UI elements, allowing it to interact precisely with objects as they appear on the screen. This ensures tests are accurate even when dealing with UIs that have shifting elements.
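
To make the idea of localization concrete, here is a minimal sketch of the simplest form of visual lookup: sliding a small reference image (a "template") over a grayscale screenshot and keeping the position with the lowest mean pixel difference. This is an illustration only, not how any particular Vision AI product works. Production tools typically rely on feature-based or learned detectors that tolerate larger changes in size and style. The names `locate_template`, `center_of`, and the `max_mean_diff` threshold are assumptions made for this example.

```python
from typing import List, Optional, Tuple

# A grayscale image as rows of pixel intensities (0-255). Hypothetical
# representation chosen to keep the sketch dependency-free.
Image = List[List[int]]


def locate_template(
    screen: Image, template: Image, max_mean_diff: float = 10.0
) -> Optional[Tuple[int, int, float]]:
    """Return (row, col, mean_abs_diff) of the best match, or None.

    Slides the template over every position of the screen and scores
    each position by the mean absolute pixel difference.
    """
    sh, sw = len(screen), len(screen[0])
    th, tw = len(template), len(template[0])
    best: Optional[Tuple[int, int, float]] = None
    for r in range(sh - th + 1):
        for c in range(sw - tw + 1):
            total = 0
            for dr in range(th):
                for dc in range(tw):
                    total += abs(screen[r + dr][c + dc] - template[dr][dc])
            mean = total / (th * tw)
            if best is None or mean < best[2]:
                best = (r, c, mean)
    # Reject the best candidate if it is still too different.
    if best is not None and best[2] <= max_mean_diff:
        return best
    return None


def center_of(match: Tuple[int, int, float], template: Image) -> Tuple[int, int]:
    """Return the (row, col) a click would target for a given match."""
    row, col, _ = match
    return (row + len(template) // 2, col + len(template[0]) // 2)
```

Because the score is a mean difference rather than an exact comparison, a button that is rendered slightly lighter or darker is still found, while a screen that does not contain the element at all returns `None`.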

Optical Character Recognition (OCR)

OCR is a key feature that allows Vision AI to read and extract text from images, screenshots or other visual content within the application interface.

- Dynamic Text Validation: OCR makes it possible for Vision AI to validate dynamic text content, such as notifications, user messages or data displayed within the app. For instance, it can read user-generated text or error messages to ensure that they appear correctly.

- Localization Testing: OCR is particularly useful for testing applications in multiple languages. By extracting and verifying text in different languages, Vision AI helps ensure that translations are accurate, free of errors, and that they don’t disrupt the UI by causing issues like text truncation or overlap.

- Document Testing: OCR can also validate content within document formats (like PDFs) embedded in an application, ensuring that text within these documents is displayed correctly and can be read as intended.
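
OCR output is rarely character-perfect, so text validation usually needs some tolerance. The sketch below assumes the text has already been extracted by an OCR engine (not shown) and only illustrates the comparison step: it normalizes whitespace and case, then accepts the result if it is similar enough to the expected string. The function names and the `min_ratio` threshold are hypothetical choices for this example.

```python
import difflib
import unicodedata


def normalize(text: str) -> str:
    """Collapse whitespace, unify Unicode forms, and ignore case."""
    text = unicodedata.normalize("NFKC", text)
    return " ".join(text.split()).casefold()


def text_matches(ocr_text: str, expected: str, min_ratio: float = 0.9) -> bool:
    """Return True if the OCR text is close enough to the expected text.

    Uses difflib's similarity ratio (0.0 to 1.0) so that a single
    misread character does not fail the check.
    """
    ratio = difflib.SequenceMatcher(
        None, normalize(ocr_text), normalize(expected)
    ).ratio()
    return ratio >= min_ratio
```

A threshold of 1.0 turns this into a strict equality check after normalization, which may be preferable for short strings such as prices or codes where a single wrong character matters.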

Image Comparison and Visual Testing

Image comparison is a powerful Vision AI feature that enables visual regression testing by comparing the current UI state to a baseline image, identifying any visual discrepancies.

- Pixel-by-Pixel Comparison: Vision AI can perform detailed pixel-by-pixel analysis to spot any minor changes, ensuring that even subtle modifications in layout, color, font or graphics are detected.

- Semantic Comparison: Besides pixel-based analysis, Vision AI can use advanced algorithms to detect meaningful changes, distinguishing between critical and non-critical visual differences. This lets it focus on significant alterations, such as changes that impact user functionality, rather than minor aesthetic adjustments.

- Consistent Visual Standards: Image comparison ensures that visual standards are consistently applied across all screens and pages, helping maintain a cohesive look and feel throughout the application.
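
A baseline comparison can be sketched in a few lines. The example below is a simplified illustration of pixel-by-pixel comparison with two tolerances: one for how much a single color channel may drift, and one for what fraction of pixels may differ before the screens are considered different. It does not attempt semantic comparison. The image representation, function names, and default thresholds are assumptions made for this sketch.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]      # (R, G, B), each 0-255
Image = List[List[Pixel]]         # rows of pixels


def diff_ratio(baseline: Image, current: Image, channel_tolerance: int = 0) -> float:
    """Return the fraction of pixels that differ between two images.

    A pixel counts as changed if any channel differs by more than
    channel_tolerance.
    """
    if len(baseline) != len(current) or any(
        len(a) != len(b) for a, b in zip(baseline, current)
    ):
        raise ValueError("images must have identical dimensions")
    total = 0
    changed = 0
    for row_a, row_b in zip(baseline, current):
        for pa, pb in zip(row_a, row_b):
            total += 1
            if any(abs(x - y) > channel_tolerance for x, y in zip(pa, pb)):
                changed += 1
    return changed / total if total else 0.0


def screens_match(
    baseline: Image,
    current: Image,
    channel_tolerance: int = 8,
    max_changed_ratio: float = 0.01,
) -> bool:
    """Return True if the current screen is within tolerance of the baseline."""
    return diff_ratio(baseline, current, channel_tolerance) <= max_changed_ratio
```

Setting both tolerances to zero gives a strict comparison that flags any change. Looser values help absorb anti-aliasing and rendering noise, at the cost of missing very small regressions.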

Self-Healing Capabilities

Self-healing is a feature in Vision AI that allows automated tests to adapt to changes in the UI, reducing the need for constant script maintenance.

- Automatic Element Adjustment: When Vision AI detects that an element has been modified, for example a change in a button’s location, name or appearance, it can automatically update the test to accommodate this change. This adaptive capability is invaluable in environments where UI changes are frequent.

- Reduction in Maintenance Overhead: Self-healing minimizes the time and effort required to maintain test scripts. When minor UI changes occur, Vision AI can adjust automatically, reducing the need for manual updates and minimizing test disruptions.

- Improved Test Resilience: Tests with self-healing are more robust, less prone to breaking, and more stable over time, leading to greater reliability and continuity in the testing process.
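
One way to picture self-healing is as a fallback lookup: try the recorded identifier first and, if it no longer exists, choose the on-screen element that most resembles the recorded one. The sketch below is a hypothetical illustration of that idea, not the algorithm used by any specific tool. The `Element` fields, the scoring weights, and the `min_score` threshold are all assumptions.

```python
import difflib
import math
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Element:
    element_id: str
    role: str    # e.g. "button"
    label: str   # visible text
    x: int       # center position in pixels
    y: int


def similarity(a: Element, b: Element, screen_diagonal: float) -> float:
    """Score how alike two elements are, from 0.0 to 1.0."""
    label_score = difflib.SequenceMatcher(
        None, a.label.casefold(), b.label.casefold()
    ).ratio()
    role_score = 1.0 if a.role == b.role else 0.0
    distance = math.hypot(a.x - b.x, a.y - b.y)
    position_score = max(0.0, 1.0 - distance / screen_diagonal)
    # Illustrative weights: visible text matters most.
    return 0.6 * label_score + 0.2 * role_score + 0.2 * position_score


def find_element(
    recorded: Element,
    current: List[Element],
    screen_diagonal: float = 1000.0,
    min_score: float = 0.7,
) -> Optional[Element]:
    """Find the recorded element on the current screen, healing if needed."""
    # 1. Prefer an exact identifier match.
    for el in current:
        if el.element_id == recorded.element_id:
            return el
    # 2. Otherwise fall back to the most similar-looking element.
    best = max(
        current,
        key=lambda el: similarity(recorded, el, screen_diagonal),
        default=None,
    )
    if best is not None and similarity(recorded, best, screen_diagonal) >= min_score:
        return best
    return None
```

Returning `None` when nothing scores above the threshold matters as much as the healing itself: a test that silently clicks the wrong element is worse than one that fails.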

Screen Navigation and Interaction Automation

These capabilities allow Vision AI to simulate user actions based on visual cues rather than relying on static locators or HTML attributes.

- Contextual Interaction: Vision AI can simulate user interactions, such as mouse clicks, typing, swipes or keystrokes, by identifying elements visually on the screen. For instance, it can recognize and click a button by its appearance rather than its code-based properties, such as its ID or class.

- Platform Versatility: This feature is especially beneficial for applications with dynamic UI elements or those that span multiple platforms (e.g., web, desktop, mobile). Vision AI’s interaction automation supports consistent behavior across different environments, improving test coverage across devices.

- Gesture Automation: Vision AI can simulate various user gestures, like scrolling or dragging, making it ideal for mobile testing, where swipe and touch interactions are common. This capability allows Vision AI to navigate and test mobile UIs as a human user would.

AI-Powered Exploratory Testing

This feature allows Vision AI to autonomously explore the application’s UI, interacting with different elements to identify potential defects or areas that require further investigation.

- Autonomous Navigation: Vision AI can independently navigate through different parts of an application, examining each element it encounters. It interacts with components, performs actions, and notes any issues or inconsistencies for further analysis.

- Prioritization of Critical Test Areas: Leveraging data from past test executions, user behavior and historical defect patterns, Vision AI prioritizes certain areas of the application to focus on, improving test efficiency and ensuring critical functionalities are covered.

- Learning from Test Results: Vision AI’s exploratory testing capabilities improve over time by analyzing previous test outcomes and user interactions. This learning ability enables Vision AI to refine its focus and become more effective in identifying high-risk areas in future test cycles.
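
The prioritization step can be illustrated with a simple risk score that combines historical failure rate with how heavily a screen is used. This is a deliberately simplified sketch under stated assumptions: the `ScreenStats` fields, the weights, and the smoothing are hypothetical, and real tools may draw on many more signals.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ScreenStats:
    name: str
    past_failures: int   # failed runs touching this screen
    runs: int            # total runs touching this screen
    usage_share: float   # fraction of user sessions visiting it, 0.0-1.0


def risk_score(
    s: ScreenStats, failure_weight: float = 0.7, usage_weight: float = 0.3
) -> float:
    """Combine smoothed failure rate and usage into a single score."""
    # Laplace smoothing: a screen with no history gets a neutral 0.5
    # failure rate instead of 0, so new screens are not ignored.
    failure_rate = (s.past_failures + 1) / (s.runs + 2)
    return failure_weight * failure_rate + usage_weight * s.usage_share


def prioritize(screens: List[ScreenStats]) -> List[ScreenStats]:
    """Return screens ordered from highest to lowest risk."""
    return sorted(screens, key=risk_score, reverse=True)
```

With this scoring, a screen that has never been tested ranks above a well-tested, stable one, which reflects the intuition that unexplored areas deserve early attention.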

Practical Applications of Vision AI in Testing

Let us review a few use cases where Vision AI is helpful in software testing:

End-to-End UI Testing

Vision AI enhances end-to-end testing by validating entire workflows from a real user’s perspective. It identifies and interacts with UI elements, such as buttons, text fields, and menus, ensuring they function as expected across the application. This type of testing helps detect visual and functional inconsistencies that might affect the user journey, like broken buttons or misplaced elements. Vision AI also checks for consistent behavior and layout across multiple screens and devices, providing a seamless experience. By simulating real user actions, it confirms that all aspects of the UI deliver an intuitive experience.

Regression Testing

This testing ensures that new code changes don’t inadvertently break existing functionality or affect the UI. Vision AI automates visual regression checks by comparing the current UI with baseline images to detect any unexpected changes in appearance, layout or color. This is particularly useful in catching subtle design shifts that may have been introduced by code modifications. Vision AI’s detailed comparisons allow it to identify even minor discrepancies, like font changes or pixel misalignment. With automated visual regression, Vision AI reduces the time and effort needed for manual checks, enhancing testing speed and accuracy.

Cross-Browser and Cross-Device Testing

Vision AI verifies that an application looks and functions consistently across different browsers and devices. It checks for layout issues, rendering inconsistencies, and compatibility problems that may arise on specific platforms, such as mobile versus desktop. By validating the appearance and behavior of UI elements across different screen sizes and resolutions, Vision AI ensures a uniform experience. This is crucial in today’s multi-device environment, where users expect applications to perform the same across various devices. Vision AI also helps identify issues like misaligned buttons or font discrepancies unique to particular platforms.

Accessibility Testing

Accessibility testing with Vision AI helps ensure that applications are usable for people with disabilities. Vision AI checks for compliance with standards like WCAG, validating visual elements such as color contrast and font sizes, and can flag images that lack alternative text. By automating these checks, Vision AI helps detect accessibility issues that could hinder the usability of the application for those with visual or motor impairments. The process also verifies that UI components are accessible via keyboard navigation, follow a logical focus order, and are exposed to screen readers. Vision AI’s automation of accessibility checks helps organizations improve inclusivity and meet regulatory requirements.
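
Color contrast is one check that is fully specified by WCAG and easy to automate once the foreground and background colors of a text region are known. The sketch below implements the WCAG 2.x relative luminance and contrast ratio formulas and the Level AA thresholds (4.5:1 for normal text, 3:1 for large text). How the two colors are sampled from a screenshot is not shown and is left as an assumption. The function names are chosen for this example.

```python
from typing import Tuple

RGB = Tuple[int, int, int]  # sRGB channels, each 0-255


def _linearize(channel: int) -> float:
    """Convert an 8-bit sRGB channel to linear light (WCAG 2.x formula)."""
    c = channel / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(rgb: RGB) -> float:
    """Relative luminance: 0.0 for black, 1.0 for white."""
    r, g, b = (_linearize(v) for v in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(foreground: RGB, background: RGB) -> float:
    """Contrast ratio between two colors, from 1.0 to 21.0."""
    l1 = relative_luminance(foreground)
    l2 = relative_luminance(background)
    lighter, darker = max(l1, l2), min(l1, l2)
    return (lighter + 0.05) / (darker + 0.05)


def meets_aa(foreground: RGB, background: RGB, large_text: bool = False) -> bool:
    """Check the WCAG Level AA contrast threshold."""
    threshold = 3.0 if large_text else 4.5
    return contrast_ratio(foreground, background) >= threshold
```

For example, mid-gray text `(119, 119, 119)` on a white background falls just short of the 4.5:1 threshold for normal text, while the slightly darker `(118, 118, 118)` passes.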

Localization Testing

Vision AI assists in localization testing by verifying that text and UI elements display correctly in various languages. With its OCR capabilities, it can check for accurate text translation and ensure the layout remains visually intact across languages with different text lengths. This is essential for maintaining a consistent user experience and ensuring that localized text doesn’t overlap or truncate inappropriately. Vision AI’s automated checks help avoid display issues caused by cultural and language variations in text, images, or symbols. It ensures the application’s UI adapts to different languages without affecting readability or functionality.

Game Testing

Vision AI streamlines game testing by validating visual components, animations, and interactive elements in games. It ensures that characters, backgrounds, and other graphical elements render correctly and respond smoothly across devices. Vision AI can identify visual glitches, inconsistencies, or performance issues that could affect the player’s experience. Additionally, it verifies that in-game UI components, like health bars and menus, are positioned accurately and work as intended. Automated testing of these visual and interactive aspects ensures a seamless and enjoyable gaming experience for players.

AR/VR Testing

AR and VR applications require precise testing of visual elements to ensure immersive experiences, and Vision AI helps by validating the alignment and responsiveness of virtual objects. It checks that virtual items align correctly with real-world surfaces and respond accurately to user movements and gestures. This testing ensures that AR/VR elements are visually accurate, preventing issues like misalignment or lag that could disrupt immersion. Vision AI also verifies that virtual objects maintain consistency across different devices and environments. By ensuring visual precision, Vision AI contributes to delivering a high-quality experience in AR/VR applications.

AI-Powered Exploratory Testing

Vision AI enhances exploratory testing by autonomously navigating the application’s UI and interacting with elements to uncover potential issues. It tests workflows in a way similar to a human tester, identifying UI inconsistencies, broken links, or other usability problems that scripted tests may overlook. By learning from previous test outcomes and user behavior, Vision AI can prioritize high-risk areas and adapt to new interactions within the application. This approach enables Vision AI to uncover unexpected issues that might not be addressed in predefined test cases. Its autonomous navigation helps broaden test coverage and increase defect discovery in complex applications.

How Vision AI Transforms Test Automation

The benefits below show how Vision AI enhances the efficiency, reliability, and accuracy of automated testing, helping teams deliver high-quality applications with improved user experiences.

Improved Test Coverage

Vision AI enables comprehensive test coverage by focusing on both functional and non-functional aspects of the user interface (UI). Traditional automation may miss visual defects that affect the user experience, but Vision AI’s ability to recognize, interpret, and interact with visual elements means it can catch these issues. It thoroughly tests the UI for layout, design, and functional consistency across multiple devices and platforms. This added layer of validation helps ensure that every aspect of the application meets quality standards, providing a more reliable and polished user experience.

Reduced Test Maintenance

With Vision AI’s self-healing capabilities, automated test scripts become more adaptable to UI changes. When minor modifications occur, such as changes in element positions, names or styles, Vision AI can automatically adjust test scripts without manual intervention. This reduces the effort spent on maintenance, a significant advantage in agile development environments where the UI often changes. By lowering the need for constant updates, Vision AI minimizes maintenance overhead and allows testers to focus on more critical testing tasks, improving testing efficiency.

Enhanced Test Resilience

Traditional test automation is prone to breaking when faced with even small UI changes, leading to frequent script failures and false positives. Vision AI provides enhanced resilience by using visual recognition rather than relying solely on code-based locators like XPath or CSS. This means tests are less likely to break from minor UI modifications, such as style adjustments or element repositions. By making automated tests more stable and reducing false failures, Vision AI improves the reliability of test results, leading to more accurate quality assessments.

Accelerated Testing Process

Vision AI speeds up the testing process by automating the recognition, validation, and interaction with visual elements on the UI. It can quickly identify changes, anomalies, or inconsistencies, providing faster feedback to development teams. This is particularly beneficial in Agile and DevOps workflows, where continuous testing and rapid iteration cycles are essential. By reducing manual testing time and accelerating test execution, Vision AI enables quicker releases without compromising on quality, helping teams meet tight deadlines more effectively.

Human-like Testing

Vision AI mimics human perception, making it especially effective for user-centric testing. It interprets the UI based on visual cues, just as a human tester would, allowing it to validate elements for usability, aesthetics, and overall user experience (UX). This human-like testing capability enables Vision AI to focus on visual factors that affect user satisfaction, such as layout harmony, readability, and intuitive navigation. By simulating real user interactions, Vision AI helps ensure that the application is visually engaging and accessible, enhancing UX.

Greater Flexibility and Scalability

Vision AI offers flexibility in testing different types of applications across a wide range of devices and platforms. It supports various testing scenarios, from web and mobile applications to AR/VR environments and complex gaming interfaces. Vision AI’s ability to adapt to different UIs makes it scalable, allowing it to grow with the application’s needs and expand test coverage as the app evolves. This flexibility and scalability are particularly valuable for applications with diverse visual elements, providing a versatile solution that can keep pace with changing requirements.

Vision AI Integration in testRigor

testRigor is a generative AI-powered test automation tool that employs diverse AI technologies to simplify and stabilize test automation. It uses NLP mainly to let testers and other stakeholders write stable automation scripts in plain English. testRigor also uses generative AI to produce test scripts and test data according to the user’s test case specification. With Vision AI, testRigor enhances the strength and quality of its test automation abilities.

Let’s see the areas where testRigor uses Vision AI to enhance test coverage and application quality:

-

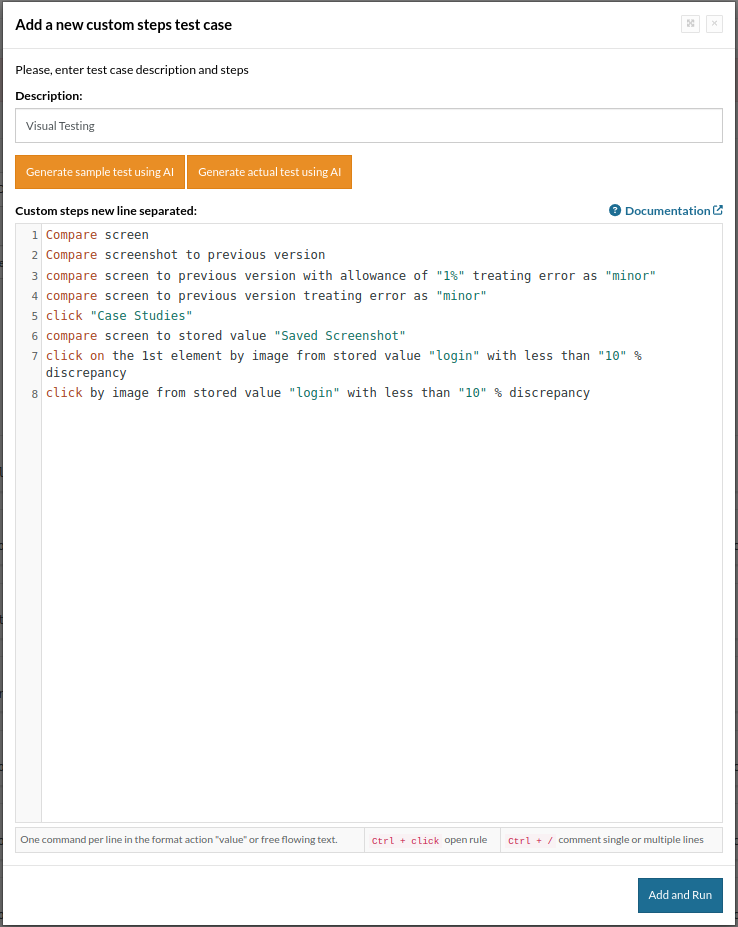

Visual Testing: With the support of Vision AI, testRigor makes visual testing straightforward. You can perform this in a single step using the “compare screen” command. Alternatively, you can capture a screenshot and save it as test data, then compare it with future test runs to detect any visual changes on application pages. This adds an extra layer of validation, ensuring consistency across the UI and enhancing overall accuracy in the testing process.

- Automatic Element Detection: Vision AI identifies UI elements based on their appearance, facilitating interactions even when elements change position, size, or styling. Users can reference elements by name or position in plain English, and testRigor will locate and perform the desired action.

- Optical Character Recognition (OCR): This feature allows testRigor to read and extract text from images or screenshots, enabling validation of dynamically rendered textual content within the application.

- Self-Healing Capabilities: Vision AI enhances test resilience by adapting to UI changes. If an element is modified or relocated, testRigor’s AI can adjust the test script accordingly, reducing the need for manual updates and minimizing maintenance efforts.