Generative AI In Software Testing

Generative AI is one of the most creative branches of artificial intelligence. It creates new content or data based on patterns learned from existing data. In software testing, this technology automates complicated tasks that usually need manual intervention, such as developing varied test cases, creating realistic test data, and predicting user behavior patterns.

What is Generative AI in Software Testing?

Generative AI is a subfield of artificial intelligence that creates content with properties similar to those of its training data. In software testing, it has transformed testing processes: tests can now be created that adapt to changing codebases and application updates.

Unlike the conventional approach, where QA engineers design tests manually from a given set of requirements, generative AI makes these processes far more efficient. It creates tests that are both resilient and adaptable by analyzing large datasets, learning from user interactions, and adapting to changes as they happen. This shift reflects the broader industry move toward CI (continuous integration) and CD (continuous delivery), which drives the need for quicker and more agile testing solutions.

For instance, when a banking application is modified with new features, generative AI could create and validate new test cases directly, without a QA engineer having to review the application requirements and write test scripts by hand. This ability to generate tests dynamically permits a testing scale and depth that was previously out of reach.

Why Is Generative AI Critical?

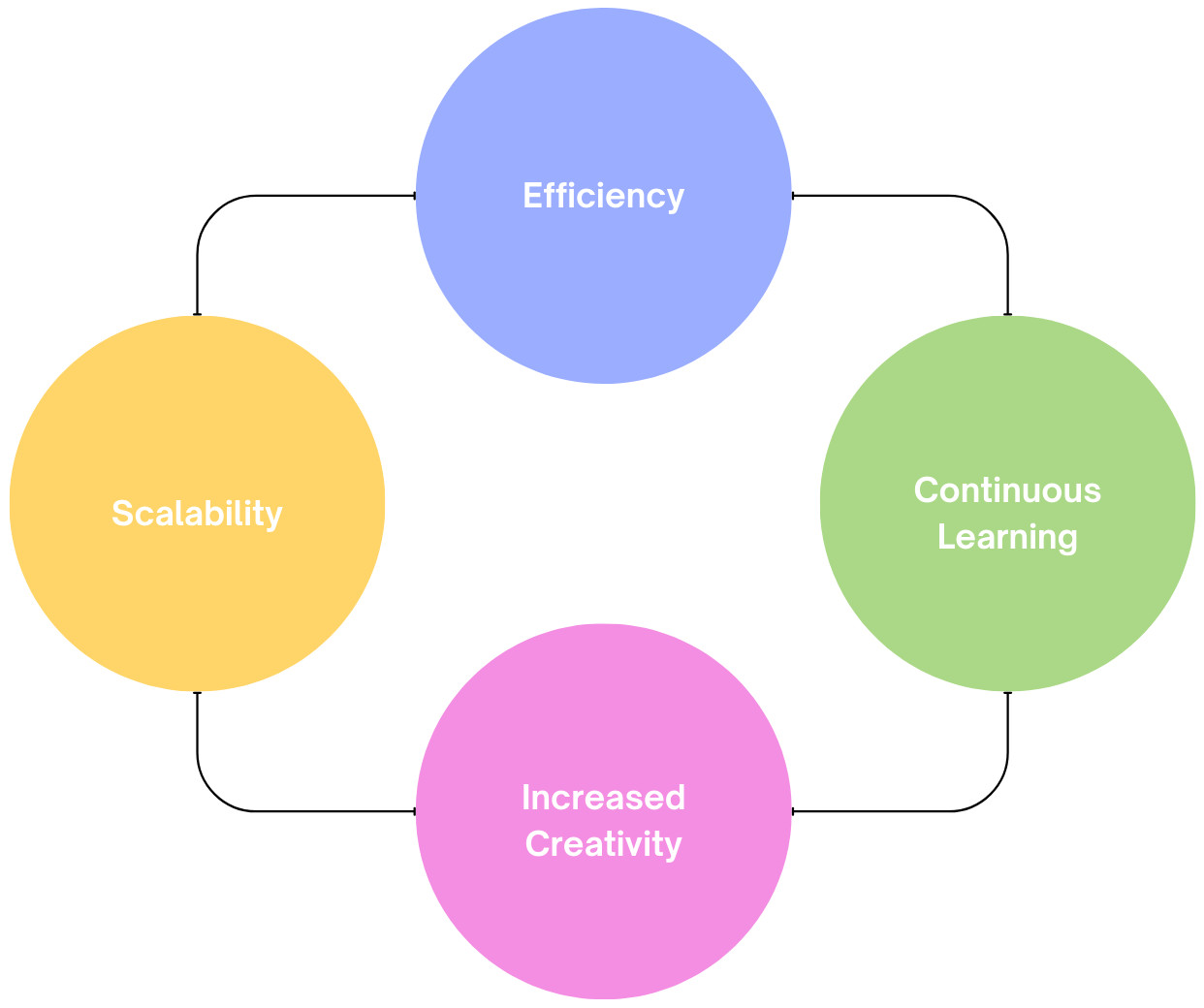

The increasing complexity of applications, user expectations for seamless experiences, and the shift towards Agile and DevOps practices have amplified the need for more efficient and thorough testing strategies. Generative AI offers numerous advantages:

- Scalability: AI-driven tools can handle large volumes of tests, automatically creating scenarios based on user patterns or expected outcomes. This is particularly important for applications requiring extensive validation across user types, devices, or geographic locations.

- Efficiency: Generative AI reduces the time it takes to create and maintain test scripts, which is especially valuable for Agile or DevOps teams focused on quick release cycles.

- Continuous Learning: Generative AI can adapt and improve over time by analyzing previous tests and outcomes, making it suitable for continuous testing environments.

- Increased Creativity: Generative AI allows testers to find paths and scenarios they might not have anticipated. This leads to a more comprehensive test coverage and ultimately reduces the risk of production issues.

Applications of Generative AI in Software Testing

Generative AI’s applications in software testing are diverse, addressing many common challenges faced by QA teams today. Below, we explore some of the most impactful applications in detail.

Test Data Generation

Test data generation is one of the most labor-intensive aspects of software testing. Generative AI simplifies this by creating realistic data tailored to various testing needs.

- Database Testing: Generative AI could generate large amounts of structured data for testing databases against various loads. A healthcare application, for example, may require thousands of sample patient records to test properly. These records can be made up of multiple types of data points like demographics, medical history, and treatment plans. They can be generated using generative AI without ever using real-world sensitive data.

- Form Validation and Input Data: Most applications use forms for data entry. Generative AI can automatically fill these forms with various types of data, including invalid data, which helps you perform black-box testing of how the application reacts to invalid or unexpected inputs.

- Creating Complex, Structured Data: Many applications in finance or insurance naturally require complex data, such as nested records or lists. Generative AI comes into play here to model this complexity and generate data that looks like the actual records from the real world. This allows QA teams to imitate realistic situations that would be very challenging to recreate manually.

In practice, AI-driven data generation creates scenarios for automated testing that are close to reality. A banking application needs a large number of user profiles, transaction histories, and account types in order to be thoroughly tested. Generative AI can create such test data sets with varied attributes, which gives broad test coverage.
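To make the banking example concrete, here is a minimal sketch of the shape such a data generator might take. It uses Python's seeded `random` module as a simple stand-in for a generative model, and every name in it (`UserProfile`, `Transaction`, `generate_profiles`, the value pools, and `INVALID_FORM_INPUTS`) is an assumption made for illustration, not part of any specific tool.

```python
import random
from dataclasses import dataclass, field

# Hypothetical value pools; a real generator would derive these from a schema or samples.
ACCOUNT_TYPES = ["checking", "savings", "credit"]
FIRST_NAMES = ["Ana", "Ben", "Chloe", "Dev", "Elena"]
LAST_NAMES = ["Ito", "Khan", "Lopez", "Meyer", "Novak"]
DESCRIPTIONS = ["transfer", "card payment", "deposit"]


@dataclass
class Transaction:
    amount_cents: int  # negative values are debits
    description: str


@dataclass
class UserProfile:
    user_id: int
    name: str
    account_type: str
    transactions: list = field(default_factory=list)


def generate_profiles(count, seed=0):
    """Return `count` synthetic profiles; the same seed gives the same data."""
    rng = random.Random(seed)
    profiles = []
    for user_id in range(1, count + 1):
        profile = UserProfile(
            user_id=user_id,
            name=f"{rng.choice(FIRST_NAMES)} {rng.choice(LAST_NAMES)}",
            account_type=rng.choice(ACCOUNT_TYPES),
        )
        # Nested records: each profile carries zero to five transactions.
        for _ in range(rng.randint(0, 5)):
            profile.transactions.append(
                Transaction(
                    amount_cents=rng.randint(-50_000, 50_000),
                    description=rng.choice(DESCRIPTIONS),
                )
            )
        profiles.append(profile)
    return profiles


# A few invalid or unexpected values for form validation checks (illustrative only).
INVALID_FORM_INPUTS = ["", " ", "a" * 10_000, "-1", "<script>alert(1)</script>"]
```

Seeding the generator keeps the data reproducible, so a failing test can be rerun against exactly the same records, and no real customer data is involved at any point.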

Automated Test Case Generation

One of the most powerful applications of generative AI is its ability to generate test cases automatically, saving teams from writing test scripts manually.

- Functional Test Case Generation: Generative AI can map the functionalities of an application and create test cases that verify if each function behaves as intended. In an e-commerce platform, for instance, particular scenarios such as adding items to cart, applying discounts and checkout can be targeted to auto-generate test cases.

- End-to-End Scenarios: Beyond individual functions, generative AI can also chain multiple user actions into end-to-end workflows. This is especially useful for applications where several features work together, such as travel booking applications, where users browse, search for flights, book hotels, and make travel arrangements in a single session.

- Exploratory Testing: In AI-based exploratory testing, the AI autonomously explores an application and finds paths or connections that the team may not have had in mind initially. This helps QA teams identify possible deficiencies in the UX or functional flow of the application.

Automated test case generation is especially helpful for Agile teams, as they can test continuously without manually creating test scripts every time. In a financial application, for instance, generative AI could automatically generate new tests whenever a feature is added or modified, so that the relevant paths are tested on every release.
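One way to picture end-to-end test generation is as path enumeration over a model of the application. The sketch below assumes a small, hand-written screen-flow graph for an e-commerce site (`FLOW` and `generate_test_paths` are hypothetical names) and lists every action sequence from the home page to the order confirmation. A generative tool would infer such a model rather than have it written by hand, so treat this as an illustration of the idea, not of any product's internals.

```python
# Hypothetical screen-flow graph: screen -> {action: next screen}.
FLOW = {
    "home": {"search": "results"},
    "results": {"add item to cart": "cart"},
    "cart": {"apply discount": "cart_discounted", "checkout": "payment"},
    "cart_discounted": {"checkout": "payment"},
    "payment": {"pay": "confirmation"},
    "confirmation": {},
}


def generate_test_paths(flow, start, goal, max_steps=8):
    """Enumerate every action sequence from `start` to `goal` via depth-first search."""
    paths = []

    def walk(screen, actions, visited):
        if screen == goal:
            paths.append(list(actions))
            return
        if len(actions) >= max_steps:
            return
        for action, next_screen in flow[screen].items():
            if next_screen in visited:
                continue  # skip loops so the search always terminates
            actions.append(action)
            walk(next_screen, actions, visited | {next_screen})
            actions.pop()

    walk(start, [], {start})
    return paths


for path in generate_test_paths(FLOW, "home", "confirmation"):
    print(" -> ".join(path))
```

When a new feature adds a screen or an action to the graph, rerunning the enumeration yields the additional paths without anyone writing them out.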

Scenario Simulation and Complex Use Cases

Generative AI can simulate complex user scenarios and workflows, capturing rare interactions that manual testers may overlook.

- Simulating User Behavior: Generative AI analyzes actual user data, identifying both the common paths that users take in an application and rare edge cases. For example, AI could emulate a user who starts a task in the application, completes part of it, leaves, and later comes back to finish it. These simulations reproduce the user journey, show whether the application works as it should under many conditions, and give greater confidence in the final release.

- Load and Performance Testing: Generative AI can simulate a range of application loads, identifying any performance issues. AI can generate test scenarios for high-traffic events, such as an e-commerce sale on Black Friday, to check how the application performs under such load.

- Discovery of Edge Cases: Edge cases, including obscure error states or out-of-the-norm user behavior, can be challenging to predict through manual testing. Thanks to generative AI, a diverse set of inputs and scenarios can be generated, pushing the limits of the system and building robustness.

With these simulations, QA teams can test for conditions that occur only infrequently in production but can have dire consequences when they cause the application to fail. Generative AI helps teams recognize such problems early on and address them.
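The leave-and-return journey described above can be sketched as a small simulation. This example assumes a four-step form (`STEPS`) and a fixed probability that the user abandons the flow between steps. Both are hypothetical, and a seeded random walk stands in here for behavior that a model would learn from real usage data.

```python
import random

# Hypothetical steps of a multi-step form.
STEPS = ["open form", "fill personal details", "fill payment details", "submit"]


def simulate_session(rng, abandon_probability=0.2):
    """Return the event list for one simulated user who may leave and come back."""
    events = []
    position = 0
    while position < len(STEPS):
        events.append(STEPS[position])
        position += 1
        # Between steps, the user may leave and later resume where they stopped.
        if position < len(STEPS) and rng.random() < abandon_probability:
            events.append("abandon")
            events.append("resume")
    return events


def generate_sessions(count, seed=0, abandon_probability=0.2):
    """Generate `count` reproducible sessions from a single seeded generator."""
    rng = random.Random(seed)
    return [simulate_session(rng, abandon_probability) for _ in range(count)]
```

Each generated event list can then drive an automated test that checks, for instance, that data entered before an `abandon` event is still present after the matching `resume`.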

Generative AI vs. Traditional Test Automation

While traditional automation has been the backbone of software testing for years, generative AI provides a paradigm shift by introducing adaptive, creative test generation capabilities.

How Traditional Automation Falls Short

In traditional automation, the QA engineer builds scripts that specify how the application should be tested. This can be the right approach for predictable and repetitive tasks, but it becomes slow and problematic if an application changes frequently. In a UI-rich application, a small change in the UI can break several hundred scripts, and fixing them manually is very time-consuming.

How Generative AI Resolves These Challenges

In contrast, generative AI does not use static scripts. Rather, it discovers trends, remembers previous tests, and generates new conditions by learning from those patterns. This becomes particularly important in Agile and DevOps environments, where software changes frequently. As generative AI keeps evolving on its own, QA engineers do not need to keep updating the scripts.

From a practical perspective, this translates into deeper and wider test coverage and greater flexibility, helping teams gain confidence in the quality of their applications. For example, a SaaS business that continually develops its platform could use generative AI to continuously monitor and test each update, supporting seamless product delivery.

The Role of Vision AI and NLP

Generative AI can perform advanced testing by integrating with Vision AI and Natural Language Processing (NLP), allowing it to handle tasks that require an understanding of visual or text-based content.

NLP for Test Case Generation and Requirement Analysis

By utilizing NLP, generative AI models can process and understand various forms of textual data like user stories, requirements, and bug reports. Such functionality becomes particularly effective when you need to convert high-level requirements into low-level test cases.

- Requirement Parsing: NLP can analyze requirements documents and identify keywords and phrases that detail what the application is supposed to do. It then generates test cases that ideally cover all possible expected outcomes and user interactions.

- Creating Complex Scenarios: By understanding the nuances in language, NLP-based generative AI can create a wide range of test cases, including edge cases that manual testers might overlook.

- Simplifying Test Creation for Non-Technical Users: Thanks to NLP, non-technical users can communicate with the testing tool in normal human language, enabling business analysts or project managers to participate right at the design level of tests.

For instance, an online learning platform where course instructors and students exchange different kinds of messages could use NLP-driven test generation. NLP can convert a requirement such as “A student shall be able to message an instructor” into concrete test cases that confirm this functionality under many possible conditions.
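As a rough illustration of requirement parsing, the sketch below pulls the actor, action, and target out of that example sentence and drafts a few test case titles. It is deliberately simplistic: a single regular expression stands in for a real NLP model, and the function names and the three test case templates are assumptions made for this example.

```python
import re

# Matches requirements shaped like "A <actor> shall be able to <action> a/an/the <target>".
REQUIREMENT_PATTERN = re.compile(
    r"^(?:A|An|The)\s+(?P<actor>[\w ]+?)\s+shall be able to\s+"
    r"(?P<action>\w+)\s+(?:a|an|the)\s+(?P<target>[\w ]+?)\.?$",
    re.IGNORECASE,
)


def parse_requirement(text):
    """Return the actor, action, and target of a requirement, or None if it does not fit."""
    match = REQUIREMENT_PATTERN.match(text.strip())
    if match is None:
        return None
    return {name: value.lower() for name, value in match.groupdict().items()}


def draft_test_cases(requirement):
    """Draft positive and negative test case titles from one requirement sentence."""
    parsed = parse_requirement(requirement)
    if parsed is None:
        return []
    actor, action, target = parsed["actor"], parsed["action"], parsed["target"]
    # Hypothetical templates; a real tool would derive conditions from context.
    return [
        f"Logged-in {actor} can {action} the {target}",
        f"Logged-out {actor} cannot {action} the {target}",
        f"{actor.capitalize()} cannot {action} the {target} with empty content",
    ]


for title in draft_test_cases("A student shall be able to message an instructor"):
    print(title)
```

Requirements that do not follow the expected sentence shape return an empty list here, which is exactly the kind of gap that a language model handles better than a fixed pattern.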

Vision AI for UI and UX Testing

Vision AI, when combined with generative AI, allows for more comprehensive UI and UX testing by assessing the application’s visual components.

- UI Consistency: Vision AI can verify the application’s visual layout and check for mismatches in alignment, colors, or font sizes. If a developer accidentally shifts a button to a different location, Vision AI could flag that change and ensure it conforms to design standards.

- Cross-Device Testing: Vision AI can take screenshots across varying screen resolutions and devices and compare them to validate the responsive design of web or mobile applications.

- Image Recognition: For applications that rely on images, such as the product image carousel in e-commerce applications, Vision AI can verify that images are rendered properly and remain high quality across environments.

Combined with Vision AI, generative AI tools offer testing that goes beyond functionality and takes visual aspects into consideration, checking that visuals meet the required standards across user journeys, devices, and screen sizes.
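The shifted-button check mentioned above can be approximated with a plain pixel comparison. The sketch below is not Vision AI: it represents screenshots as nested lists of RGB tuples and reports the bounding box of whatever changed, which is the kind of baseline signal a vision model would then interpret. All function names are hypothetical.

```python
def blank(width, height, color=(255, 255, 255)):
    """Create a width x height image filled with one color."""
    return [[color] * width for _ in range(height)]


def draw_rect(image, left, top, right, bottom, color):
    """Fill an inclusive rectangle, for example to represent a button."""
    for y in range(top, bottom + 1):
        for x in range(left, right + 1):
            image[y][x] = color


def diff_region(baseline, candidate, tolerance=0):
    """Return the bounding box (left, top, right, bottom) of changed pixels, or None."""
    if len(baseline) != len(candidate) or any(
        len(row_a) != len(row_b) for row_a, row_b in zip(baseline, candidate)
    ):
        raise ValueError("screenshots must have the same dimensions")
    changed = [
        (x, y)
        for y, (row_a, row_b) in enumerate(zip(baseline, candidate))
        for x, (pixel_a, pixel_b) in enumerate(zip(row_a, row_b))
        # A pixel counts as changed if any channel differs by more than the tolerance.
        if max(abs(a - b) for a, b in zip(pixel_a, pixel_b)) > tolerance
    ]
    if not changed:
        return None
    xs = [x for x, _ in changed]
    ys = [y for _, y in changed]
    return (min(xs), min(ys), max(xs), max(ys))


baseline = blank(10, 6)
draw_rect(baseline, 2, 2, 4, 3, (0, 0, 255))   # button in its designed position
candidate = blank(10, 6)
draw_rect(candidate, 5, 2, 7, 3, (0, 0, 255))  # button accidentally shifted right
print(diff_region(baseline, candidate))
```

A small `tolerance` lets the comparison ignore minor rendering noise such as anti-aliasing, while still flagging a moved or recolored element.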

How testRigor Uses Generative AI for Testing

testRigor uses generative AI, Vision AI, and NLP to enable efficient and adaptable testing for modern applications. This tool is especially suited for teams focused on low-maintenance, scalable, and user-friendly testing solutions.

Generative AI for Test Case and Data Preparation

Generative AI helps testRigor create new test cases and produce unique test data quickly.

- Test Case Generation: testRigor can generate test cases from a test case description written in plain English. Based on the description, the test case can be generated end-to-end. Users can also specify how many test cases to generate, which helps create more edge cases and increase test coverage.

- Test Data Generation: When different teams share the same test data, test automation tends to produce flaky results. testRigor uses generative AI to create unique test data that is specific to each test case, which helps keep automation results reliable.

NLP and Vision AI for Simplified Test Automation

By integrating Vision AI and NLP, testRigor allows users to create and modify tests without needing advanced technical skills. testRigor can automatically interpret and validate changes in the UI, adjusting tests without manual input from QA engineers.

- Plain Language Test Creation: With NLP, anyone on the project can create or modify test cases. With testRigor, you can write test scripts in plain English. NLP then processes these English sentences so that testRigor can perform the intended actions. For example, a project manager can add a validation to an existing test case like “check that button “Add to Cart” is disabled”, and NLP will process this statement for testRigor to execute.

- Automated UI Testing: testRigor uses Vision AI to recognize changes in UI elements, such as buttons, text fields, or images, and adjust tests accordingly. This is particularly helpful in dynamic web applications where UI elements change frequently.

Low-Maintenance and Adaptive Testing

testRigor’s generative AI capabilities mean that tests are low-maintenance and automatically updated as the application evolves. This reduces the workload for QA teams, who would otherwise need to update test scripts constantly.

For instance, in an Agile project with bi-weekly sprints, the application might change significantly between iterations. testRigor can adapt to these changes autonomously, maintaining test accuracy and reducing the burden on QA teams.

Empowering Non-Technical Users

testRigor’s approach to test creation via plain language makes it possible for stakeholders without coding knowledge to participate in the QA process. Business analysts or project managers can create test cases directly, bridging the gap between business requirements and technical testing needs.

Regression Testing with Minimal Manual Intervention

Regression testing ensures that new code changes do not introduce defects in previously functional areas. With testRigor, generative AI automatically updates regression tests as the application evolves, keeping the test suite relevant and accurate.

Comprehensive Exploratory Testing

Generative AI enables exploratory testing by autonomously navigating different paths within the application, identifying interactions that may not have been initially defined. This proactive testing approach allows QA teams to uncover and address issues that would have gone undetected otherwise.

Challenges for Generative AI in Software Testing

While generative AI offers many advantages, it also presents certain challenges.

Handling Edge Cases and Bias

AI models might miss specific edge cases without proper training data. testRigor overcomes this by using adaptive algorithms that learn from previous results, making it possible to improve accuracy over time.

Complex User Scenarios

Real-world applications often require complex, multi-step user flows that can be difficult for generative models to simulate effectively. testRigor lets you test email, file, databases, Captcha, 2FA, tables, maps, SMS, phone calls, audio, video, APIs, and many such complex scenarios in plain English commands.

Security and Privacy

Generative AI models may expose sensitive data or overlook security vulnerabilities due to a limited understanding of secure coding practices. testRigor is SOC2 and HIPAA compliant and supports FDA 21 CFR Part 11 reporting. testRigor understands your compliance requirements and helps you test them with its powerful features.

Future of Generative AI in Software Testing

Generative AI is expected to become increasingly integrated into software testing as its applications expand to new areas.

Expansion into Cybersecurity Testing

One of the most promising applications of generative AI in software testing is cybersecurity. Cybersecurity testing requires simulating malicious behaviors, detecting vulnerabilities, and assessing how well systems withstand attacks. Traditional approaches to security testing are limited by the creativity and time constraints of human testers. Generative AI, however, can create complex attack patterns that simulate real-world threats, allowing organizations to proactively identify and mitigate potential security issues.

IoT and Edge Device Testing

The rise of the Internet of Things (IoT) introduces unique challenges in testing, as these devices often operate in unpredictable environments and interact with other devices, networks, and platforms. Traditional testing methods struggle to account for the complexity and variability of IoT environments, but generative AI can help address these challenges by creating test scenarios that mimic real-world usage patterns and edge cases.

Adaptive Learning and Self-healing Tests

One of the most promising areas of generative AI in software testing is adaptive learning and self-healing tests. Currently, when an application’s interface or code changes, it often results in broken test scripts, requiring manual intervention to update the scripts. Generative AI, however, can enable self-healing tests by identifying changes and adjusting test cases accordingly.
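The self-healing idea can be sketched with a locator that falls back to a second signal when its primary one breaks. In this example, an element is looked up by `id` first and, if the `id` has changed, by the closest visible text using `difflib.SequenceMatcher` from Python's standard library. The page representation, the `find_element` function, and the 0.6 threshold are assumptions for illustration; real tools combine many more signals.

```python
from difflib import SequenceMatcher


def similarity(a, b):
    """Case-insensitive similarity between two strings, from 0.0 to 1.0."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def find_element(elements, locator, threshold=0.6):
    """Find by id first; if the id changed, fall back to the closest visible text.

    Returns (element, healed), where `healed` is True when the fallback was used.
    """
    for element in elements:
        if element["id"] == locator["id"]:
            return element, False
    best = max(
        elements,
        key=lambda e: similarity(e["text"], locator["text"]),
        default=None,
    )
    if best is not None and similarity(best["text"], locator["text"]) >= threshold:
        return best, True  # healed: matched by text instead of id
    return None, False


# Hypothetical page snapshots before and after a UI change renamed the button id.
old_page = [{"id": "btn-add", "text": "Add to Cart"}, {"id": "btn-buy", "text": "Buy Now"}]
new_page = [{"id": "cart-add-v2", "text": "Add to cart"}, {"id": "btn-buy", "text": "Buy Now"}]
locator = {"id": "btn-add", "text": "Add to Cart"}

print(find_element(new_page, locator))
```

Returning the `healed` flag alongside the element lets the test report that it adapted, so the team can review the change instead of having it silently absorbed.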

Conclusion

Generative AI represents a significant leap forward in software testing. Its adaptability, ability to scale, and user accessibility make it invaluable for modern QA teams. By streamlining processes and improving test coverage, testRigor enables faster release cycles, higher-quality applications, and more efficient testing workflows, laying the foundation for the next generation of software testing.